Exploring New Frontiers: How Apache Flink, Apache Hudi and Presto Power New Insights and Gold Nuggets at Scale

Danny Chan & Sagar Sumit, Onehouse

In this talk, attendees will walk away with:
- The current challenges of running analytics on transactional data systems with data streams at scale
- How Hudi unlocks incremental processing on the lake
- How Presto enables ad-hoc queries that support exploration of data written by Flink
- How you can leverage Flink, Hudi and Presto to build incremental materialized views
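The incremental model behind the last two bullets can be illustrated with a toy Python sketch. It is not Hudi's or Flink's API: each record carries a commit time, an incremental pull returns only records committed after a checkpoint, and a materialized view folds in just those new records instead of recomputing from scratch. `Record`, `incremental_pull`, and `IncrementalSumView` are hypothetical names, and the view assumes an append-only source.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    key: str          # grouping key, e.g. a user id (hypothetical)
    amount: int       # value being aggregated (hypothetical)
    commit_time: int  # monotonically increasing commit instant (simplified)


def incremental_pull(table, since):
    """Return only records committed strictly after the `since` checkpoint."""
    return [r for r in table if r.commit_time > since]


class IncrementalSumView:
    """Toy materialized view: running sum of `amount` per `key`.

    Assumes an append-only source; updates and deletes would need
    retraction logic that this sketch leaves out.
    """

    def __init__(self):
        self.totals = defaultdict(int)
        self.checkpoint = 0  # last commit time already folded into the view

    def refresh(self, table):
        new_records = incremental_pull(table, self.checkpoint)
        for r in new_records:
            self.totals[r.key] += r.amount
        if new_records:
            self.checkpoint = max(r.commit_time for r in new_records)
        return len(new_records)  # number of records this refresh processed


table = [Record("a", 5, 1), Record("b", 3, 1)]
view = IncrementalSumView()
view.refresh(table)              # processes 2 records
table.append(Record("a", 2, 2))  # a later commit lands
view.refresh(table)              # processes only the 1 new record
print(dict(view.totals))         # {'a': 7, 'b': 3}
```

Each refresh costs work proportional to the newly committed data rather than the whole table, which is the property that makes incremental materialized views attractive at scale.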

How to Speed up your Lakehouse Queries by an Order of Magnitude with Multi-modal Index Subsystem using Apache Hudi and Presto

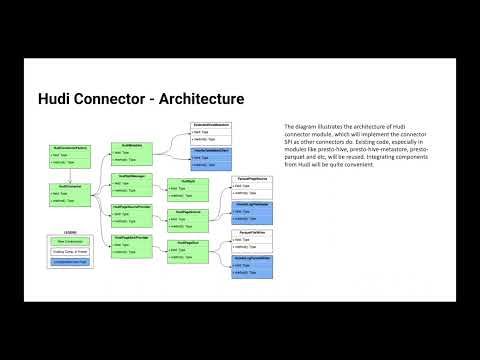

Sivabalan Narayanan of Onehouse shares how Apache Hudi brought transactions and incremental processing to data lakes, capabilities regarded as foundational pillars of the Lakehouse architecture. In this session, we will discuss Apache Hudi and how it fills key technology gaps in the modern data architecture. Viewed through a data engineering lens, Hudi also plays a key unifying role between the batch and stream processing worlds through its incremental processing model. We will look at the capabilities of the native Hudi connector in Presto, diving deep into the key optimizations and features it unlocks. Presto users can now leverage the Hudi metadata table to optimize file listing and avoid a large number of list operations on cloud storage. Finally, we will look at how to improve query latency in Presto using advanced data skipping techniques enabled by Hudi's multi-modal index subsystem.
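Data skipping of this kind commonly relies on per-file column statistics. The toy Python sketch below (not the Presto connector's or Hudi's code) keeps a min/max range per file for one column and drops every file whose range cannot overlap a range predicate. `FileStats`, `prune_files`, and the file names are hypothetical; in Hudi, such statistics can be kept in the metadata table's column stats index so the engine reads them instead of opening every file.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    path: str     # hypothetical data file name
    min_val: int  # minimum of the filtered column in this file
    max_val: int  # maximum of the filtered column in this file


def prune_files(stats, lo, hi):
    """Keep only files whose [min_val, max_val] range can overlap [lo, hi].

    A file whose range lies entirely outside the predicate cannot contain
    a matching row, so the engine never has to open it.
    """
    return [s.path for s in stats if s.max_val >= lo and s.min_val <= hi]


column_stats = [
    FileStats("f1.parquet", 0, 99),
    FileStats("f2.parquet", 100, 199),
    FileStats("f3.parquet", 200, 299),
]
# Predicate: WHERE col BETWEEN 120 AND 150
print(prune_files(column_stats, 120, 150))  # ['f2.parquet']
```

Pruning is only as good as the data layout: if every file spans the full value range, min/max statistics skip nothing, which is why layout optimizations such as clustering complement the index.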

Presto and Apache Hudi

In this talk, we introduce Hudi, discuss its different table and query types, and show how Hudi integrates with Presto to support these queries. We would like to share our experience of how this integration has evolved over time and also discuss upcoming file listing and query planning improvements for Hudi queries in Presto.

PrestoDB and Apache Hudi for the Lakehouse – Sagar Sumit & Bhavani Sudha Saktheeswaran

Apache Hudi is a rich platform for building self-managing, exabyte-scale data lakes, optimized for incremental as well as regular batch processing. Hudi tables can be seamlessly synced to the Hive metastore, which unlocks the powerful capabilities of the Presto engine via the Hive connector. The Presto-Hudi integration is over five years old. What started as simply fetching splits using a custom input format for a Hudi Copy-On-Write table has evolved into snapshot querying of Merge-On-Read tables and using Hudi's internal metadata table to boost query performance. In this session, we trace that journey and discuss in detail the recent developments that have made this integration stronger in terms of both usability and performance. We also discuss the additional features that come with the brand-new presto-hudi connector, such as the multi-modal index and data skipping for better query performance.
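To make the table and query types concrete: on a Merge-On-Read table, updates land in log files alongside a columnar base file. A read-optimized query reads only the base file, while a snapshot query merges the newer log records at read time. The toy Python sketch below models that merge with plain dictionaries; `read_optimized`, `snapshot`, and the `(key, value, commit_time)` log layout are hypothetical simplifications, not Hudi's file formats or merge logic.

```python
def read_optimized(base):
    """Read-optimized view: only the base file, which may be stale."""
    return dict(base)


def snapshot(base, log):
    """Snapshot view: base file merged with newer log records.

    `log` is a list of (key, value, commit_time) tuples; a value of None
    marks a delete in this toy model. Later commits win.
    """
    merged = dict(base)  # copy so the base file itself is untouched
    for key, value, _commit in sorted(log, key=lambda e: e[2]):
        if value is None:
            merged.pop(key, None)
        else:
            merged[key] = value
    return merged


base_file = {"k1": "v1", "k2": "v2"}
log_file = [("k2", "v2-updated", 5), ("k3", "v3", 6), ("k1", None, 7)]
print(read_optimized(base_file))      # {'k1': 'v1', 'k2': 'v2'}
print(snapshot(base_file, log_file))  # {'k2': 'v2-updated', 'k3': 'v3'}
```

The trade-off is visible even in this toy: the read-optimized view is cheaper but misses recent writes, while the snapshot view is fresh but pays a merge cost on every read until compaction folds the log into a new base file.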

Speeding up Presto Queries Using Apache Hudi Clustering – Satish Kotha & Nishith Agarwal, Uber

Apache Hudi is a data lake platform that supercharges data lakes. Originally created at Uber, Hudi provides various ways to balance ingestion speed against query performance, such as user-defined partitioners and automatic file sizing, both of which favor query performance. Hudi integrates with PrestoDB to make this data available for queries. During ingestion, data is typically co-located by arrival time. However, query engines perform better when frequently queried data is co-located, and that grouping may differ from arrival-time order. We will discuss a new framework called "data clustering" that makes data lakes adapt to query patterns, thereby improving query latencies. Finally, we will discuss future work on improving data locality through custom bucketing of data during ingestion, avoiding some of the rewrite costs.
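The effect of clustering on query latency can be shown with a toy Python model. It is not Hudi's clustering service: rows arrive in an order unrelated to the queried column, so every file spans the full value range and min/max pruning skips nothing; rewriting the rows sorted by that column narrows each file's range so a selective predicate touches far fewer files. `make_files`, `files_scanned`, `cluster`, and the `city_id` column are hypothetical.

```python
def make_files(values, rows_per_file):
    """Split column values into fixed-size toy "files", preserving order."""
    return [values[i:i + rows_per_file] for i in range(0, len(values), rows_per_file)]


def files_scanned(files, lo, hi):
    """Count files whose [min, max] range overlaps the predicate [lo, hi]."""
    return sum(1 for f in files if max(f) >= lo and min(f) <= hi)


def cluster(files, rows_per_file):
    """Toy clustering: rewrite every row sorted by the queried column."""
    all_rows = sorted(v for f in files for v in f)
    return make_files(all_rows, rows_per_file)


# Arrival order scatters the queried column (say, a city id in 0..999):
# every 1,000 consecutive arrivals hit all 1,000 ids exactly once.
arrivals = [(i * 7919) % 1000 for i in range(10_000)]
before = make_files(arrivals, rows_per_file=1000)
after = cluster(before, rows_per_file=1000)

# Predicate: WHERE city_id BETWEEN 100 AND 110
print(files_scanned(before, 100, 110))  # 10
print(files_scanned(after, 100, 110))   # 1
```

The rewrite itself costs a full read and write of the affected data, which is the cost that bucketing during ingestion aims to avoid. Sorting on a single column is the simplest layout; queries that filter on several columns are often better served by multi-dimensional orderings, which this sketch does not attempt.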