Implementing Lakehouse Architecture with Presto at Bolt – Kostiantyn Tsykulenko, Bolt.eu

Bolt.eu is the first European mobility super-app. We have over 100M users across Europe and Africa and deal with data at large scale every day (over 100k queries daily). We previously used a traditional data warehouse solution based on Redshift, but we faced scalability issues that were hard to overcome, and after doing our research we chose Presto as the solution. In just a single year we migrated to the Lakehouse architecture using AWS, Presto, Spark and Delta Lake. We would like to talk about our journey, some of the challenges we encountered and how we solved them.

Exploring New Frontiers: How Apache Flink, Apache Hudi and Presto Power New Insights and Gold Nuggets at Scale

Danny Chan & Sagar Sumit, Onehouse – In this talk, attendees will walk away with: the current challenges of analytics on transactional data systems with data streams at scale; how Hudi unlocks incremental processing on the lake; how Presto allows ad hoc queries that support data exploration on Flink data; and how to leverage Flink, Hudi and Presto to build incremental materialized views.

The Future of Presto’s Query Optimizer – Bill McKenna, Ahana

In this talk, the query optimizer OG himself, Bill McKenna (Principal Software Engineer at Ahana, architect of the query optimizer that became the code base of the Amazon Redshift query optimizer, and co-author of The Volcano Optimizer Generator: Extensibility and Efficient Search), goes into detail about the state of modern query optimizers, how Presto stacks up against them, and where it will go in the near future. If database theory is your jam, you won’t want to miss this deeply technical presentation from one of the pioneers in the field.

How to Speed up your Lakehouse Queries by an Order of Magnitude with Multi-modal Index Subsystem using Apache Hudi and Presto

Sivabalan Narayanan of Onehouse shares how Apache Hudi brought transactions and incremental processing to data lakes, which are regarded as foundational pillars of the Lakehouse architecture. In this session, we will discuss Apache Hudi and how it fills the key technology gaps in the modern data architecture. Viewed through a data engineering lens, Hudi also plays a key unifying role between the batch and stream processing worlds, realized by the incremental processing model. We will take a look at the capabilities of the native Hudi connector in Presto and dive deep into it, covering the key optimizations and features it unblocks. Presto users can now leverage the metadata table for optimized file listing and avoid a large number of list operations in cloud storage. We will also look at how to improve query latency in Presto using the advanced data skipping methodologies provided by Hudi's multi-modal index subsystem.
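To make the idea of data skipping concrete, here is a minimal sketch in Python. It is not Hudi's or Presto's actual API or metadata layout: the `FileStats` record, the `files_to_scan` helper, the file names, and the `trip_id` column are all hypothetical, and the sketch only assumes that an index stores per-file minimum and maximum values for a column.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Hypothetical per-file column statistics, as an index might store them."""
    path: str
    min_value: int
    max_value: int


def files_to_scan(index, lower, upper):
    """Keep only files whose [min, max] range overlaps the predicate range."""
    return [f.path for f in index if f.max_value >= lower and f.min_value <= upper]


# Illustrative index over three data files (made-up names and ranges).
INDEX = [
    FileStats("part-0001.parquet", 0, 99),
    FileStats("part-0002.parquet", 100, 199),
    FileStats("part-0003.parquet", 200, 299),
]

# Predicate: WHERE trip_id BETWEEN 150 AND 220
selected = files_to_scan(INDEX, 150, 220)
print(selected)
```

Only the files whose value range can contain matching rows are read; the first file is skipped without being opened, which is where the latency saving comes from.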

Build & Query Secure S3 Data Lakes with Ahana Cloud and AWS Lake Formation

AWS Lake Formation is a service that allows data platform users to set up a secure data lake in days. Creating a data lake with Presto and AWS Lake Formation is as simple as defining data sources and what data access and security policies you want to apply. In this talk, Wen will walk through the recently announced AWS Lake Formation and Ahana integration.

Running PrestoDB on Kubernetes with Ahana Cloud and AWS EKS

PrestoDB is built to be cloud agnostic and container-friendly, but getting it to run on Kubernetes in the cloud can be challenging. In this talk, Gary Stafford (AWS) and Dipti Borkar (Ahana) will discuss why to use the in-VPC deployment model with AWS, and will demo deploying PrestoDB on AWS EKS using the Ahana Cloud managed service within the user’s AWS account.

Presto and Apache Hudi

In this talk we will introduce Hudi, discuss its different table and query types, and explain how Hudi integrates with Presto to support these queries. We would like to share our experience of how this integration has evolved over time, and also discuss upcoming file listing and query planning improvements for Hudi queries in Presto.

Building the Presto Open Source Community – Ahana Round Table

In this round table moderated by Eric Kavanagh of The Bloor Group, panelists from Uber, Facebook, Ahana, and Alibaba will discuss all aspects of building a thriving open source community around PrestoDB including why Presto is so popular & the problems it solves, the open source model the foundation follows, why governance and transparency are so important to an open source community, and what the community looks for in open source projects.

Free-Forever Managed Service for Presto for your Cloud-Native Open SQL Lakehouse – Wen Phan, Ahana

Getting started with a do-it-yourself approach to standing up an open SQL Lakehouse can be challenging and cumbersome. Ahana Cloud Community Edition dramatically simplifies it and gives you the ability to learn and validate Presto for your open SQL Lakehouse—for free. In this session, we’ll show you how easy it is to register for, stand up, and use the Ahana Cloud Community Edition to query on top of your Lakehouse.

How Blinkit is Building an Open Data Lakehouse with Presto on AWS – Satyam Krishna & Akshay Agarwal

Blinkit, India’s leading instant delivery service, uses Presto on AWS to help them deliver on their promise of “everything delivered in 10 minutes”. In this session, Satyam and Akshay will discuss why they moved to Presto on S3 from their cloud data warehouse for more flexibility and better price performance. They’ll also share more on their open data lakehouse architecture which includes Presto as their SQL engine for ad hoc reporting, Ahana as SaaS for Presto, Apache Hudi and Iceberg to help manage transactions, and AWS S3 as their data lake.

Query Execution Optimization for Broadcast Join using Replicated-Reads Strategy – George Wang, Ahana

Today Presto supports broadcast join by having one worker fetch data from a small data source to build a hash table, and then send the entire table over the network to all other workers, where it is probed by rows from the large data source. This can be optimized by a new query execution strategy in which source data from small tables is pulled directly by all workers, known as replicated reads from dimension tables. This feature comes with a nice caching property: because all N worker nodes now participate in scanning the data from remote sources, the table scan operation for dimension tables is cacheable on every worker node. In addition, resource utilization improves because the Presto scheduler can reduce the number of plan fragments to execute, as the same workers run tasks in parallel within a single stage, which reduces data shuffles.

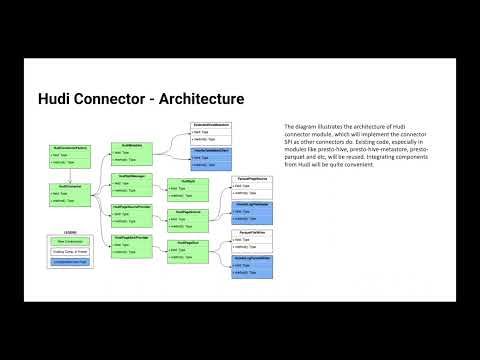
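The difference between the two strategies described in this abstract can be sketched with a small in-memory simulation. This is an illustration under stated assumptions, not Presto's implementation: every function name, the `scan_dim` callback, and the sample rows are hypothetical, each fact partition stands in for one worker, and the counters only model where dimension rows travel.

```python
from collections import defaultdict


def build_hash_table(rows, key):
    """Index dimension rows by join key."""
    table = defaultdict(list)
    for row in rows:
        table[row[key]].append(row)
    return table


def probe(fact_rows, table, key):
    """Probe the hash table with fact rows and emit merged rows."""
    return [{**dim, **fact} for fact in fact_rows for dim in table.get(fact[key], [])]


def broadcast_join(fact_partitions, scan_dim, key):
    """One worker scans the dimension table and ships it to every other worker."""
    dim_rows = scan_dim()  # single remote scan
    stats = {"remote_scans": 1,
             "rows_shuffled": len(dim_rows) * (len(fact_partitions) - 1)}
    table = build_hash_table(dim_rows, key)
    out = []
    for part in fact_partitions:  # each partition stands for one worker
        out.extend(probe(part, table, key))
    return out, stats


def replicated_read_join(fact_partitions, scan_dim, key):
    """Every worker scans the dimension table itself; nothing is shuffled."""
    stats = {"remote_scans": 0, "rows_shuffled": 0}
    out = []
    for part in fact_partitions:
        dim_rows = scan_dim()  # per-worker scan, a candidate for local caching
        stats["remote_scans"] += 1
        out.extend(probe(part, build_hash_table(dim_rows, key), key))
    return out, stats


# Made-up sample data: a two-row dimension table and three fact partitions.
DIM = [{"region_id": 1, "region": "EU"}, {"region_id": 2, "region": "AF"}]
FACTS = [
    [{"region_id": 1, "amount": 10}],
    [{"region_id": 2, "amount": 5}, {"region_id": 1, "amount": 7}],
    [{"region_id": 3, "amount": 1}],
]

bc_rows, bc_stats = broadcast_join(FACTS, lambda: list(DIM), "region_id")
rr_rows, rr_stats = replicated_read_join(FACTS, lambda: list(DIM), "region_id")
print(bc_stats, rr_stats)
```

Both functions return the same joined rows. The trade-off shows up in the counters: the replicated-reads variant performs one scan per worker but moves no dimension rows between workers, and those repeated scans are the part that a per-worker cache could absorb.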

PrestoDB and Apache Hudi for the Lakehouse – Sagar Sumit & Bhavani Sudha Saktheeswaran

Apache Hudi is a rich platform for building self-managing, exabyte-scale data lakes, optimized for incremental as well as regular batch processing. Hudi tables can be seamlessly synced to the Hive metastore, which unlocks the powerful capabilities of the Presto engine via the Hive connector. The Presto-Hudi integration is over five years old. What started as simply fetching splits using a custom input format for a Hudi Copy-On-Write table has evolved into snapshot querying of Merge-On-Read tables and using Hudi’s internal metadata table to boost query performance. In this session, we trace that journey and discuss in detail the recent developments that have made this integration stronger in terms of both usability and performance. We also discuss the additional features that come with the brand-new presto-hudi connector, such as the multi-modal index and data skipping for better query performance.

Panel Discussion: Presto for the Open Data Lakehouse

Today’s digital-native companies need a modern data infrastructure that can handle data wrangling and data-driven analytics for the ever-increasing amount of data needed to drive business. Specifically, they need to address challenges like complexity, cost, and lock-in. An Open SQL Data Lakehouse approach enables flexibility and better cost performance by leveraging open technologies and formats. Join us for this panel where leading technologists from the Presto open source project will share their vision of the SQL Data Lakehouse and why Presto is a critical component.