Keynote Panel: Presto at Scale – Shradha Ambekar, Gurmeet Singh, Neerad Somanchi & Rupa Gangatirkar

Over the last decade Presto has become one of the most widely adopted open source SQL query engines. In use at companies large and small, Presto’s performance, reliability, and efficiency at scale have become critical to many companies’ data infrastructures. In this panel we’ll hear from three of the largest companies running Presto at scale – Meta, Uber, and Intuit. They’ll share more about their learnings, some of their impressive performance metrics with Presto, and what they envision going forward for Presto at their respective companies.

Exploring New Frontiers: How Apache Flink, Apache Hudi and Presto Power New Insights and Gold Nuggets at Scale

Danny Chan & Sagar Sumit, Onehouse – In this talk, attendees will walk away with: the current challenges of analytics on transactional data systems with data streams at scale; how Hudi unlocks incremental processing on the lake; how Presto allows ad-hoc queries that support data exploration on Flink data; and how you can leverage Flink, Hudi and Presto to build incremental materialized views.
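To make the idea of an incremental materialized view concrete, here is a minimal sketch. It is not Hudi, Flink, or Presto code: `Commit`, `IncrementalSum`, and the string commit instants are hypothetical names chosen for illustration, and the sketch assumes append-only records (updates and deletes would need extra handling). The point is only that each refresh reads the commits made after the last checkpoint instead of rescanning the whole table.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Commit(NamedTuple):
    """Hypothetical stand-in for one commit on a table's timeline."""
    instant: str                      # sortable commit timestamp (assumption)
    records: List[Tuple[str, int]]    # (key, amount) rows added by the commit


class IncrementalSum:
    """A per-key running total that is refreshed incrementally."""

    def __init__(self) -> None:
        self.totals: Dict[str, int] = defaultdict(int)
        self.last_instant = ""        # checkpoint: newest commit already applied

    def refresh(self, timeline: List[Commit]) -> int:
        """Apply only commits newer than the checkpoint; return how many."""
        applied = 0
        for commit in sorted(timeline, key=lambda c: c.instant):
            if commit.instant <= self.last_instant:
                continue              # already reflected in the view
            for key, amount in commit.records:
                self.totals[key] += amount
            self.last_instant = commit.instant
            applied += 1
        return applied


timeline = [
    Commit("20240101", [("us", 10), ("uk", 5)]),
    Commit("20240102", [("us", 7)]),
]
view = IncrementalSum()
first_refresh = view.refresh(timeline)    # applies both commits

timeline.append(Commit("20240103", [("uk", 1)]))
second_refresh = view.refresh(timeline)   # applies only the new commit
```

The second refresh touches one commit rather than three, which is the saving an incremental model aims for as the table grows.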

How to Speed up your Lakehouse Queries by an Order of Magnitude with Multi-modal Index Subsystem using Apache Hudi and Presto

Sivabalan Narayanan of Onehouse shares more about how Apache Hudi brought transactions and incremental processing to data lakes, which are considered foundational pillars of the Lakehouse architecture. In this session, we will discuss Apache Hudi and how it fills the key technology gaps in the modern data architecture. Viewed through a data engineering lens, Hudi also plays a key unifying role between the batch and stream processing worlds, realized by the incremental processing model. We will take a look at the capabilities of the native Hudi connector in Presto. We will dive deep into this connector, covering the key optimizations and features it unlocks. Presto users can now leverage the metadata table for optimized file listing and avoid a large number of list operations in cloud storage. We will look at how we can improve query latency in Presto using the advanced data skipping methodologies provided by Hudi's multi-modal index subsystem.
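The general idea behind data skipping can be sketched in a few lines. This is an illustration under stated assumptions, not the Hudi metadata table format or the Presto connector API: `FileStats`, `files_to_scan`, and the file names are hypothetical. The sketch assumes each data file has a recorded minimum and maximum per column, so a range predicate can rule out files without opening them.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class FileStats:
    """Hypothetical per-file column statistics kept in an index."""
    path: str
    column_ranges: Dict[str, Tuple[int, int]]  # column -> (min, max)


def files_to_scan(index: List[FileStats], column: str,
                  low: int, high: int) -> List[str]:
    """Return files whose [min, max] for `column` overlaps [low, high]."""
    selected = []
    for stats in index:
        col_range = stats.column_ranges.get(column)
        if col_range is None:
            # No statistics for this column: the file cannot be ruled out.
            selected.append(stats.path)
            continue
        col_min, col_max = col_range
        if col_max >= low and col_min <= high:
            selected.append(stats.path)
    return selected


index = [
    FileStats("a.parquet", {"order_id": (1, 100)}),
    FileStats("b.parquet", {"order_id": (101, 200)}),
    FileStats("c.parquet", {"order_id": (201, 300)}),
]

# WHERE order_id BETWEEN 150 AND 180 only needs the middle file.
pruned = files_to_scan(index, "order_id", 150, 180)
```

Because the statistics live in an index rather than in the data files, the pruning decision needs no file listing or footer reads, which is where the latency saving comes from.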

The Past, Present, and Future of Presto – Philip Bell, Meta

PrestoDB recently underwent major architectural updates as the Presto Foundation grows its membership and looks to vastly increase the number of new commits and forks. Achieving this desired end state required successfully refactoring and improving Presto’s already impressive speed, efficiency, reliability, and extensibility. Establishing PrestoDB as a premier Open Source project required a major commitment of time and resources from Meta to ensure the community can benefit from this project for years to come, as well as positioning PrestoDB to evolve beyond what Meta alone could create. Members of the Presto Foundation need more of you to be involved in this major evolution in Presto’s history and core components, and to bring your own inventive ideas to the mix.

Presto at Meta: A Guide to Tuning Clusters at Enormous Scale

Facebook operates Presto at an enormous scale. A critical part of the success of Presto is properly tuning the clusters according to the use case they target. Swapnil Tailor, Basar Onat and Tim Meehan describe important session and configuration properties used to tune Presto, and give guidance on when and how to use them.

Presto and Apache Hudi

In this talk we are going to introduce Hudi, discuss its different table and query types, and explain how Hudi integrates with Presto to support these queries. We would like to share our experience of how this integration has evolved over time and also discuss upcoming file listing and query planning improvements for Presto Hudi queries.

Presto on Spark – Facebook – Virtual Meetup

At Facebook, we have spent the past several years independently building and scaling both Presto and Spark to Facebook-scale batch workloads. It is now increasingly evident that there is significant value in coupling Presto’s state-of-the-art low-latency evaluation with Spark’s robust and fault-tolerant execution engine. In this talk, we’ll take a deep dive into Presto and Spark architecture with a focus on key differentiators (e.g., disaggregated shuffle) that are required to further scale Presto.

Building the Presto Open Source Community – Ahana Round Table

In this round table moderated by Eric Kavanagh of The Bloor Group, panelists from Uber, Facebook, Ahana, and Alibaba will discuss all aspects of building a thriving open source community around PrestoDB including why Presto is so popular & the problems it solves, the open source model the foundation follows, why governance and transparency are so important to an open source community, and what the community looks for in open source projects.

Common Sub Expression Optimization at Facebook

In complex analytics queries, we often see repeated expressions, for example parsing the same JSON column but extracting different fields, or elaborate CASE statements that share some predicates and differ in others. Previously, Presto would compute the same expression as many times as it appeared in the query. With common sub expression optimization, we evaluate the same expression only once within the same project operator or filter operator. In our workload, we’ve seen 3x improvements on certain queries with expensive common sub expressions like JSON_PARSE. Microbenchmarks also show a consistent ~10% performance improvement for simple common sub-expressions like x + y. In this talk, we will talk about how this is implemented.
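The effect described here can be illustrated with a small sketch. It does not show how Presto implements the optimization; it is a per-row memoization stand-in with hypothetical names (`Evaluator`, `FUNCTIONS`, tuple-encoded expressions) that counts how often an expensive function runs when two projections share a `json_parse` sub-expression.

```python
import json
from typing import Any, Dict, List, Tuple

# Expressions are nested tuples (an assumption for this sketch):
#   ("col", name) | ("const", value) | (function_name, arg_expr, ...)
Expr = Tuple[Any, ...]

FUNCTIONS = {
    "json_parse": json.loads,
    "get": lambda obj, key: obj[key],
}


class Evaluator:
    def __init__(self, share_common: bool) -> None:
        self.share_common = share_common
        self.call_counts: Dict[str, int] = {}

    def evaluate_row(self, projections: List[Expr], row: Dict[str, Any]) -> List[Any]:
        cache: Dict[Expr, Any] = {}   # lives for one row within one operator
        return [self._eval(expr, row, cache) for expr in projections]

    def _eval(self, expr: Expr, row: Dict[str, Any], cache: Dict[Expr, Any]) -> Any:
        if self.share_common and expr in cache:
            return cache[expr]        # identical sub-expression already computed
        kind = expr[0]
        if kind == "col":
            value = row[expr[1]]
        elif kind == "const":
            value = expr[1]
        else:
            args = [self._eval(arg, row, cache) for arg in expr[1:]]
            self.call_counts[kind] = self.call_counts.get(kind, 0) + 1
            value = FUNCTIONS[kind](*args)
        if self.share_common:
            cache[expr] = value
        return value


# SELECT get(json_parse(payload), 'user'), get(json_parse(payload), 'country')
parsed = ("json_parse", ("col", "payload"))
projections = [("get", parsed, ("const", "user")),
               ("get", parsed, ("const", "country"))]
row = {"payload": '{"user": "ada", "country": "UK"}'}

naive = Evaluator(share_common=False)
shared = Evaluator(share_common=True)
naive_result = naive.evaluate_row(projections, row)
shared_result = shared.evaluate_row(projections, row)
```

Both evaluators return the same values, but the naive one parses the JSON once per projection while the sharing one parses it once per row.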

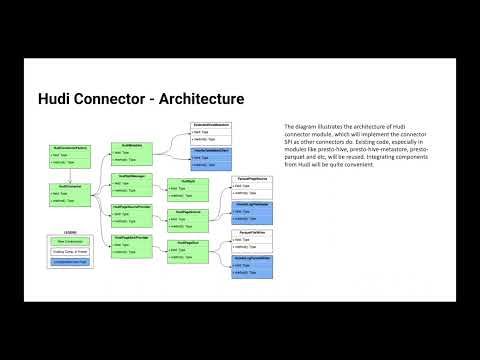

PrestoDB and Apache Hudi for the Lakehouse – Sagar Sumit & Bhavani Sudha Saktheeswaran

Apache Hudi is a rich platform to build self-managing, exabyte-scale data lakes, optimized for incremental as well as regular batch processing. Hudi tables can be seamlessly synced to the Hive metastore, which unlocks the powerful capabilities of the Presto engine via the Hive connector. The Presto-Hudi integration is over five years old. What started as simply fetching splits using a custom input format for a Hudi Copy-On-Write table has evolved into snapshot querying of Merge-On-Read tables and using Hudi’s internal metadata table to boost query performance. In this session, we trace that journey and discuss in detail the recent developments that have made this integration stronger in terms of both usability and performance. We discuss the additional features that come with the brand new presto-hudi connector, such as the multi-modal index and data skipping for better query performance.

Presto & the Foundations of Open Lake House: Trends & Opportunities – Biswapesh Chattopadhyay, Meta

Building open and shared foundational tech to build a lake house architecture can provide the best-of-breed user experience across the Analytics and ML domains and potentially beyond. In this talk, Biswa will share examples drawn from the evolution of the data stack at Meta over the last few years including efforts towards dialect unification (Sapphire aka Presto-on-Spark and Xstream-IE streaming engine efforts), eval unification (using Velox as the base), eliminating the need for data duplication for interactive analytics by building smart caching (RaptorX), building a best-of-breed file format that works across Analytics and ML (Alpha), and building an open source ML data pre-proc engine (TorchArrow) which shares the core dialect and eval components with Presto.

Panel Discussion: Presto for the Open Data Lakehouse

Today’s digital-native companies need a modern data infra that can handle data wrangling and data-driven analytics for the ever-increasing amount of data needed to drive business. Specifically, they need to address challenges like complexity, cost, and lock-in. An Open SQL Data Lakehouse approach enables flexibility and better cost performance by leveraging open technologies and formats. Join us for this panel where leading technologists from the Presto open source project will share their vision of the SQL Data Lakehouse and why Presto is a critical component.

Presto On Spark: Scaling not Failing with Spark – Ariel Weisberg, Meta & Shradha Ambekar, Intuit

Presto on Spark is an integration between Presto and Spark that leverages Presto’s compiler/evaluation as a library and Spark’s large scale processing capabilities. It enables a unified SQL experience between interactive and batch use cases. A unified option for batch data processing and ad hoc queries is very important for creating the experience of queries that scale instead of fail, without requiring rewrites between different SQL dialects. In this session, we’ll talk about the Presto on Spark architecture, why it matters, and its implementation and usage at Intuit.

Presto on Elastic Capacity – Neerad Somanchi & Abhisek Saikia, Meta

Elasticity of a shared fleet is one of the fundamental pillars of the IaaS (Infrastructure-as-a-Service) world. The ability of services to efficiently use both guaranteed and non-guaranteed (opportunistic) capacity is important in such a setting. Presto is great when it runs on guaranteed capacity (i.e., capacity that is fixed and stable). But what if we want Presto to leverage elastic (opportunistic) capacity, i.e., capacity that is shifting, but in a predictable manner (think Amazon EC2 Spot Blocks)? In this lightning presentation, Neerad Somanchi and Abhisek Saikia will talk about how a recent feature developed for Presto can help it efficiently utilize such elastic compute.