Keynote Panel: Presto at Scale – Shradha Ambekar, Gurmeet Singh, Neerad Somanchi & Rupa Gangatirkar

Over the last decade Presto has become one of the most widely adopted open source SQL query engines. In use at companies large and small, Presto’s performance, reliability, and efficiency at scale have become critical to many companies’ data infrastructures. In this panel we’ll hear from three of the largest companies running Presto at scale – Meta, Uber, and Intuit. They’ll share more about their learnings, some of their impressive performance metrics with Presto, and what they envision going forward for Presto at their respective companies.

Presto on ARM – Chunxu Tang & Jiaming Mai, Alluxio

Traditionally, the deployment of Presto has been limited to Intel processors with the x86 architecture. However, with the growing popularity of ARM architecture, Chunxu and Jiaming have extended the Presto ecosystem to ARM and conducted a series of benchmark experiments. Their objective is to evaluate the performance of Presto on ARM architecture and identify key insights from the experiments. In this presentation, Chunxu and Jiaming will share the results of their performance evaluation and discuss some of the most significant findings from their research.

Speeding Up Presto in ByteDance – Shengxuan Liu, ByteDance & Beinan Wang, Alluxio

Shengxuan Liu from ByteDance and Beinan Wang from Alluxio will present the practical problems they encountered, and the interesting findings they made, during the launch of Presto Router and Alluxio Local Cache. Their talk covers how ByteDance’s Presto team implemented cache invalidation and a dashboard for Alluxio’s Local Cache. Shengxuan will also share his experience using a customized cache strategy to improve cache efficiency and system reliability.

Scaling Cache for Presto Iceberg Connector – Beinan Wang & Chunxu Tang, Alluxio

When the Presto Iceberg connector is in use, Presto’s in-heap cache is likely to become overloaded. In this talk, Beinan and Chunxu will share the design, implementation, and optimization of an off-heap cache that addresses these scalability challenges. You will learn how to cache Iceberg data and metadata for the Presto Iceberg connector, and hear about future work on improving table scans using Apache Arrow.

The Past, Present, and Future of Presto – Philip Bell, Meta

PrestoDB recently underwent major architectural updates as the Presto Foundation grows its membership and looks to greatly increase the number of new commits and forks. Reaching that goal required refactoring Presto and improving its already impressive speed, efficiency, reliability, and extensibility. Establishing PrestoDB as a premier open source project required a major commitment of time and resources from Meta, both to ensure the community can benefit from the project for years to come and to position PrestoDB to evolve beyond what Meta alone could create. Members of the Presto Foundation need more of you to get involved in this major evolution of Presto’s history and core components, and to bring your own inventive ideas to the mix.

Presto at Meta: A Guide to Tuning Clusters at Enormous Scale

Facebook operates Presto at an enormous scale. A critical part of the success of Presto is properly tuning the clusters according to the use case they target. Swapnil Tailor, Basar Onat and Tim Meehan describe important session properties and configuration properties used to configure Presto, and guidance on when and how to use them.

Presto on Spark – Facebook – Virtual Meetup

At Facebook, we have spent the past several years independently building and scaling both Presto and Spark to handle Facebook-scale batch workloads. It is now increasingly evident that there is significant value in coupling Presto’s state-of-the-art low-latency evaluation with Spark’s robust and fault-tolerant execution engine. In this talk, we’ll take a deep dive into the Presto and Spark architectures, with a focus on the key differentiators (e.g., disaggregated shuffle) that are required to further scale Presto.

Building the Presto Open Source Community – Ahana Round Table

In this round table moderated by Eric Kavanagh of The Bloor Group, panelists from Uber, Facebook, Ahana, and Alibaba will discuss all aspects of building a thriving open source community around PrestoDB including why Presto is so popular & the problems it solves, the open source model the foundation follows, why governance and transparency are so important to an open source community, and what the community looks for in open source projects.

Common Sub Expression Optimization at Facebook

In complex analytics queries, we often see repeated expressions: for example, parsing the same JSON column but extracting different fields, or elaborate CASE statements that share some predicates and differ in others. Previously, Presto would compute the same expression as many times as it appeared in the query. With common sub expression optimization, we evaluate the same expression only once within the same project operator or filter operator. In our workload, we’ve seen 3x improvements on certain queries with expensive common sub expressions like JSON_PARSE. Microbenchmarks also show a consistent ~10% performance improvement for simple common sub-expressions like x + y. In this talk, we will talk about how this is implemented.
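To make the idea concrete, here is a minimal Python sketch of evaluating a shared sub-expression once per row within a single projection. It is only an illustration of the general technique, not Presto’s implementation: the `Expr` and `RowEvaluator` classes, the helper functions, and the operation counter are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass(frozen=True)
class Expr:
    """Hypothetical expression node; frozen so equal sub-trees hash alike."""
    op: str                      # "col", "const", "add", or "mul"
    args: Tuple[Any, ...] = ()   # child Exprs, or a single name/value for leaves


def col(name: str) -> Expr:
    return Expr("col", (name,))


def const(value: Any) -> Expr:
    return Expr("const", (value,))


def add(a: Expr, b: Expr) -> Expr:
    return Expr("add", (a, b))


def mul(a: Expr, b: Expr) -> Expr:
    return Expr("mul", (a, b))


class RowEvaluator:
    """Evaluates expressions for one row, optionally sharing common results."""

    def __init__(self, row: Dict[str, Any], share_common: bool = True):
        self.row = row
        self.share_common = share_common
        self.cache: Dict[Expr, Any] = {}
        self.op_count = 0  # number of add/mul operations actually executed

    def evaluate(self, e: Expr) -> Any:
        if e.op == "col":
            return self.row[e.args[0]]
        if e.op == "const":
            return e.args[0]
        if e.op not in ("add", "mul"):
            raise ValueError(f"unknown operator: {e.op}")
        # Structurally identical sub-expressions hit the cache.
        if self.share_common and e in self.cache:
            return self.cache[e]
        left = self.evaluate(e.args[0])
        right = self.evaluate(e.args[1])
        self.op_count += 1
        value = left + right if e.op == "add" else left * right
        if self.share_common:
            self.cache[e] = value
        return value


def project(row: Dict[str, Any], exprs: List[Expr], share_common: bool = True):
    """Evaluate all projected expressions for one row; return values and op count."""
    evaluator = RowEvaluator(row, share_common)
    return [evaluator.evaluate(e) for e in exprs], evaluator.op_count


if __name__ == "__main__":
    s = add(col("x"), col("y"))                 # the common sub-expression x + y
    projections = [s, mul(s, const(2)), mul(s, s)]
    row = {"x": 3, "y": 4}
    print(project(row, projections, share_common=False))
    print(project(row, projections, share_common=True))
```

In this sketch the cache lives for a single row and a single projection, mirroring the abstract’s point that sharing happens within one project or filter operator; the results are identical in both modes and only the number of executed operations changes.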

Architecting Your Data Platform with Presto and Alluxio in Heterogeneous Environment – Adit Madan

As the cloud is evolving and the adoption of a hybrid-cloud or multi-cloud approach grows, the data architecture must adapt to heterogeneous environments. In this talk, Adit Madan shares insights on how to architect a data platform with Presto and Alluxio that provides agility and simplicity to your data team.

Dynamic UDF Framework and its Applications – Rongrong Zhong, Alluxio & Yanbing Zhang, ByteDance

Presto has supported dynamically registered User Defined Functions (UDFs) since 2020. Over the years, we have used this framework to add support for SQL UDFs and remote / external UDFs. One common community request in the UDF domain is support for Hive UDFs. Many companies have legacy Hive pipelines, and engineers who are familiar with HQL and Hive UDFs. With remote UDFs, one can implement Hive UDF support as UDFs running on a remote cluster. But since Hive UDFs are written in Java, we can also run them inside the engine. We extended the dynamic UDF framework to support Java UDFs, and used this new extension to add Hive UDF support in Presto. With this feature, users can directly use their familiar Hive UDFs and UDAFs in their Presto queries.

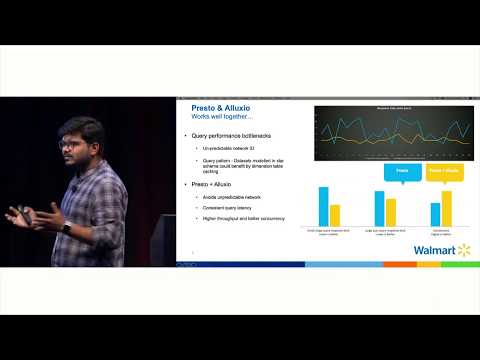

Speed Up Presto at Uber with Alluxio Caching – Chen Liang, Uber & Beinan Wang, Alluxio

At Uber, Presto is heavily used as one of the primary data analytics tools, and Presto’s query performance has a profound impact on production at Uber. As part of the Presto optimization effort, we explored Alluxio as a caching solution. Alluxio is an open source data orchestration platform that many compute frameworks use as a caching layer. Alluxio caching is currently enabled on ~2000 nodes across 6 clusters at Uber. In this presentation, we will talk about our journey at Uber of integrating Alluxio cache into Presto. We will discuss the Uber-specific challenges we encountered and how we addressed them, and present the performance improvements we have seen. Finally, we will discuss our plans and next steps, and potential future collaboration opportunities with the community.

Presto & the Foundations of Open Lake House: Trends & Opportunities – Biswapesh Chattopadhyay, Meta

Open, shared foundational technology for a lake house architecture can provide a best-of-breed user experience across the Analytics and ML domains, and potentially beyond. In this talk, Biswa will share examples drawn from the evolution of the data stack at Meta over the last few years, including efforts towards dialect unification (Sapphire aka Presto-on-Spark and Xstream-IE streaming engine efforts), eval unification (using Velox as the base), eliminating the need for data duplication for interactive analytics by building smart caching (RaptorX), building a best-of-breed file format that works across Analytics and ML (Alpha), and building an open source ML data preprocessing engine (TorchArrow) which shares the core dialect and eval components with Presto.

Panel Discussion: Presto for the Open Data Lakehouse

Today’s digital-native companies need a modern data infra that can handle data wrangling and data-driven analytics for the ever-increasing amount of data needed to drive business. Specifically, they need to address challenges like complexity, cost, and lock-in. An Open SQL Data Lakehouse approach enables flexibility and better cost performance by leveraging open technologies and formats. Join us for this panel where leading technologists from the Presto open source project will share their vision of the SQL Data Lakehouse and why Presto is a critical component.